ImagingLab Robotics Library for DENSO Reference Guide

Introduction

This reference guide introduces the basic concepts of the ImagingLab Robotics Library for DENSO, with examples that serve as a starting point for programming your own DENSO robotics and vision-guided system. It explains how to use the library and explores single-point moves, blended moves, and vision-guided applications. To get the most out of the example code, which is attached at the bottom of this guide, the DENSO robot should already be operational with the teaching pendant. Note that this reference guide is a configurable example, not a turnkey application, and does not introduce every aspect of the ImagingLab Robotics Library or DENSO robots. If you are developing a complicated robotics system, consider consulting an NI Partner.

Configuring Your System

Robslave

To switch the robot into a slave state that you can control with NI LabVIEW software, you must load and start a DENSO program called robslave.pac, which comes with the DENSO b-CAP software on the robot controller. Use the DENSO software to download robslave.pac to the controller. After connecting to the controller, use the Denso-Robslave VI to start the program and to stop the program when exiting the LabVIEW application.

Allowing Ethernet Control

All of the robot commands from LabVIEW are sent over an Ethernet connection to the robot controller. The robot controller allows control from only one IP address, which you must add to the controller as a valid client. To do this on the teaching pendant, navigate to Settings»Communications Setting»Client. With this menu, you can change the IP address to match the IP address of the PC or real-time device that you are connecting to the robot. You also must set the robot to receive commands over Ethernet. Navigate to Settings»Communications Setting»Ext Run on the teaching pendant and select Ethernet as the communication port from which to run programs. The Ethernet port must also have Read/Write permissions, which are set in Settings»Communications Setting»Permit.

Wiring

To control the DENSO robot with LabVIEW, you must turn the teaching pendant’s key to Auto and turn on the Enable Auto lines on the Safety I/O port. In addition, you must connect the Stop all Steps input (the step-stop line) of the mini-I/O connector on the DENSO controller to a 24 V supply. This can be an external 24 V power supply, or you can change the jumpers within the controller so that the 24 V is supplied internally. Refer to the DENSO manual for more information.

Initialization

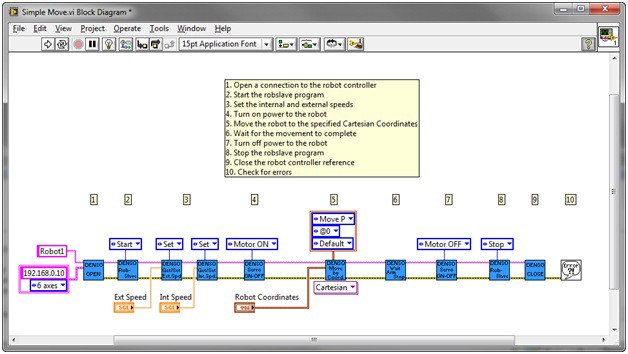

Connecting to the Robot Controller

The first step in programming a DENSO robotics application is to initialize the robot and its parameters. Open a connection to the robot controller by using the Denso-Open VI, specifying the IP address of the controller and the number of axes the robot has. To find the IP address of the robot’s controller, navigate to Settings»Communications Setting»Address on the DENSO teaching pendant. Note that you must add the IP address of the PC or real-time target connecting to the robot controller as a valid client in the robot’s communications setting menu, as described in Allowing Ethernet Control. The Denso-Open VI options for number of axes are 6, 5, 4, and Undefined. You do not need to differentiate between robot models that have the same number of axes. For example, you can run some programs written for a VS series six-axis robot on a VP series six-axis robot without changing any LabVIEW code.

Setting Speeds

You can set two different robot speed types within LabVIEW: external and internal.

External speed is most often used to vary the speed between moves once the application is deployed. For instance, the robot may need to move slowly while assembling a part but can move faster when returning to pick up another part. After the robot has placed the part, or before it picks up a part, simply call the external speed VI to set the desired speed.

Internal speed is commonly used for testing purposes. If a test needs to be completed at 25 percent speed, you can set the internal speed to 25, and the velocities of all the robot’s movements are scaled back to 25 percent of their programmed values. Otherwise, you would need to go into the code and set each speed to 25 percent of its original value, which can be tedious because some applications use a large variety of speeds for various movements.

For safety, set the internal and external speeds after starting robslave.pac to ensure the robot moves at the correct speed each time you run the program.
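The scaling arithmetic can be illustrated with a short Python sketch. This is not library code: the function name `effective_speed` and the move names are hypothetical, and the sketch assumes the speed settings combine multiplicatively as percentages. The guide describes that behavior for internal speed; applying the same rule to external speed is an assumption made here for illustration.

```python
def effective_speed(programmed_pct, internal_pct=100.0, external_pct=100.0):
    """Return the resulting speed as a percentage of the robot's maximum.

    Assumption: each setting is a percentage in (0, 100] and the settings
    multiply together. This models the scaling described in the text; it is
    not taken from the library itself.
    """
    settings = {
        "programmed": programmed_pct,
        "internal": internal_pct,
        "external": external_pct,
    }
    for name, value in settings.items():
        if not 0.0 < value <= 100.0:
            raise ValueError(f"{name} speed must be in (0, 100], got {value}")
    return programmed_pct * (internal_pct / 100.0) * (external_pct / 100.0)


# Hypothetical per-move speeds written into an application.
moves = {"approach": 80.0, "assemble": 20.0, "retract": 100.0}

# One internal speed setting of 25 scales every move for a test run,
# so none of the per-move values has to be edited.
scaled = {name: effective_speed(pct, internal_pct=25.0) for name, pct in moves.items()}
print(scaled)
```

With the internal speed at 25, the three moves run at a quarter of their programmed speeds, and restoring the setting to 100 returns them to their original values without touching the per-move numbers.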

Power On/Off

Opening a connection to the robot controller does not power the robot’s motors; they remain off until you call the Denso – Servo – Set ON-OFF VI. Call this VI with the motor status input set to Motor On before sending any move commands, and call it again with the input set to Motor Off after all movement is completed.
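The ordering of the initialization and shutdown steps described so far can be summarized in a text-based sketch. The library itself is a set of LabVIEW VIs, so the Python below is only an analogy: `FakeRobot`, `robot_session`, and the step strings are hypothetical, the IP address is a placeholder, and the final "close" step is an assumed counterpart to opening the connection rather than something this guide describes. The stand-in class only records the order of calls and does not talk to any hardware.

```python
from contextlib import contextmanager


class FakeRobot:
    """Hypothetical stand-in that only records the order of requested steps."""

    def __init__(self):
        self.log = []

    def call(self, step):
        self.log.append(step)


@contextmanager
def robot_session(robot, ip_address, axes, internal_speed, external_speed):
    """Run the start-up steps in order and always undo them in reverse."""
    robot.call(f"open {ip_address} axes={axes}")
    try:
        robot.call("start robslave.pac")
        try:
            # Speeds are set after robslave.pac starts, as the guide advises.
            robot.call(f"set internal speed {internal_speed}")
            robot.call(f"set external speed {external_speed}")
            robot.call("motor on")
            try:
                yield robot
            finally:
                robot.call("motor off")
        finally:
            robot.call("stop robslave.pac")
    finally:
        robot.call("close")  # assumed counterpart to "open"


robot = FakeRobot()
try:
    with robot_session(robot, "192.168.0.1", 6, 25, 100):
        robot.call("move")
        raise RuntimeError("simulated fault during a move")
except RuntimeError:
    pass

for step in robot.log:
    print(step)
```

The nested `try`/`finally` blocks mirror the intent of the text: even when a move fails, the motors are switched off and robslave.pac is stopped before the application exits. In LabVIEW the same effect is typically achieved by wiring the error cluster through the shutdown VIs so they still execute after an error.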

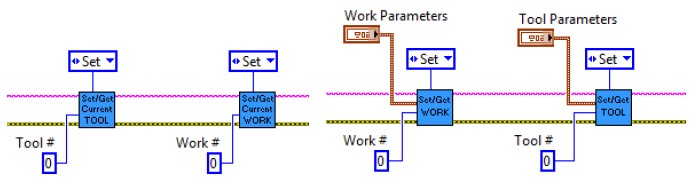

Work and Tool

With DENSO robots, the user can set the current tool, or end effector, which is attached to the end of the robot. With this feature, changing the end effector does not require a complete change in programmed positions. Instead, this feature automatically offsets the movements to reach the same target position based on the parameters of the end effector being used. The coordinate system, or work area, defines the absolute positions when commanding the robot using Cartesian and Trans coordinates. The ImagingLab Robotics Library for DENSO allows the user to set and edit the current tool and work area as well as add new tools and work areas using the VIs shown in the screenshots below. These screenshots show how to set the current tool/work and new values for a specific tool/work, but you can change the input from Set to Get to return the current values and settings.
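To see why a tool definition removes the need to reprogram positions, consider a simplified geometric sketch. It is an illustration under stated assumptions, not the controller's actual computation: the function name `flange_position` is hypothetical, the tool is reduced to a pure translation from the flange to the tool tip, and the flange orientation is reduced to a single rotation about the work Z axis. Real tool definitions can also include rotations. All numbers are made-up values in millimeters.

```python
import math


def flange_position(tip_xyz, rz_deg, tool_offset_xyz):
    """Return where the flange must be so the tool tip reaches tip_xyz.

    tip_xyz         -- target tool-tip position in work coordinates
    rz_deg          -- flange rotation about the work Z axis, in degrees
    tool_offset_xyz -- flange-to-tip offset expressed in the flange frame
    """
    c = math.cos(math.radians(rz_deg))
    s = math.sin(math.radians(rz_deg))
    tx, ty, tz = tool_offset_xyz
    # Rotate the tool offset from the flange frame into work coordinates.
    ox = c * tx - s * ty
    oy = s * tx + c * ty
    x, y, z = tip_xyz
    # The flange sits "behind" the tip by the rotated offset.
    return (x - ox, y - oy, z - tz)


target = (300.0, 0.0, 200.0)   # same programmed target for both tools
gripper = (0.0, 0.0, 120.0)    # hypothetical long gripper
probe = (0.0, 0.0, 45.0)       # hypothetical short probe

print(flange_position(target, 0.0, gripper))
print(flange_position(target, 0.0, probe))
```

The programmed target is identical in both calls; only the tool offset changes, and the flange position is adjusted accordingly. This is the effect the text describes when it says that changing the end effector does not require a complete change in programmed positions.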

Next Step

Continue to the following paper to learn how to program a single-point move using the ImagingLab Robotics Library for DENSO.