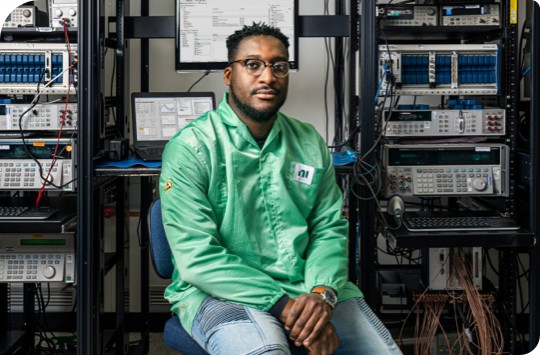

NI’s quarterly Automotive Journal explores the latest testing trends, best practices, and examples of automotive innovation. Read about how we are advancing the future of transportation and accelerating the path to Vision Zero.

Awards

“NI is a pioneer in the development of HIL and VIL testing methodologies. Its ECU test system and battery test system increase flexibility and integrate new technologies to help reduce the development time of autonomous cars and EVs.” — Rohan Joy Thomas, Industry Analyst

Collaboration

Putting our customers’ needs first and elevating the impact of their creativity and innovation are at the heart of how NI works. We’re proud to work with the engineers at top Tier 1 suppliers and OEMs to advance the future of mobility.

“The ease of setting up the whole system enabled us to deliver world-class quality on time and at the right cost with limited resources.”

The BMW Group shifted the development of low-voltage power systems toward a virtual approach with a digital twin to identify system design weaknesses earlier.

CATARC created a battery-in-the-loop (BIL) test solution to fill the gap between HIL testing and real-vehicle road and site testing.

NI Services

Maximize productivity and reduce costs with NI services. As your trusted partner and expert connector, we’re here to help you Engineer Ambitiously™.