Enterprise System Connectivity

Overview

Welcome to the Test Management Software Developers Guide. This guide is a collection of white papers designed to help you develop test systems that lower your cost, increase your test throughput, and scale with future requirements. This paper describes enterprise systems connectivity.

Introduction

Test management software should include test sequence creation and execution functionality as an integral part of your software framework. In addition to these standard test executive abilities, you are likely to want your framework to include connectivity to enterprise systems. This connectivity keeps your test systems integrated into the larger network of tools and applications used throughout your business. Whether you are integrating source code control tools or data management systems, combining multiple software solutions brings inherent challenges. To simplify the integration, base the connectivity in your framework on industry-standard tools and protocols whenever possible.

Before going further, what do we mean by “enterprise systems”? As the examples of source code control and databases suggest, enterprise, in this context, refers to business-wide operations and management-level systems (predominantly software-based). At a minimum, enterprise systems are used by more than a single test station or even a single department.

The enterprise systems commonly integrated into a test software framework include tools for configuration management, requirements management, data management, and communicating results. Each of these concepts has associated software tools, which in turn have interfaces for connectivity from your test-specific software. As each of these examples is discussed, the particular interfaces and the benefits of integration will be covered.

Configuration Management

As part of managing your test systems, you are probably keeping track of the specific hardware and software used in the system. This may include the inventory of instruments as well as ensuring the most recent, or most functional, software is installed on the system. These tasks are all part of the range of tasks and responsibilities of configuration management.

Configuration management involves software developers, hardware technicians, and possibly even purchasing and inventory resources. For software, performing configuration management includes connecting your test software to source code control (SCC) tools as well as managing software deployment and upgrade processes. Because this discussion is about integrating enterprise software into your test framework, the rest of this section focuses on software configuration management.

Software Configuration Management

Software configuration management is often treated as synonymous with source code control, or version control. That is, a key aspect of managing your software configuration is controlling which version of software is installed on the test stations, both the software you have purchased and the software you have developed. In either case, controlling the version controls the features and functionality available.
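As a minimal sketch of controlling installed versions, the following Python snippet compares the software versions reported by a station against an approved baseline. The package names, version numbers, and the `find_version_drift` helper are hypothetical; a real system would read both sets from its own configuration records.

```python
def parse_version(text):
    """Turn a dotted version string such as '2.10.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def find_version_drift(baseline, installed):
    """Return {package: (expected, found)} for every package off the baseline.

    `found` is None when a baseline package is missing from the station.
    """
    drift = {}
    for package, expected in baseline.items():
        found = installed.get(package)
        if found is None or parse_version(found) != parse_version(expected):
            drift[package] = (expected, found)
    return drift


# Hypothetical approved baseline and the versions reported by one station.
baseline = {"test-framework": "4.2.0", "dmm-driver": "1.10.3"}
station = {"test-framework": "4.2.0", "dmm-driver": "1.9.7"}

print(find_version_drift(baseline, station))
# prints {'dmm-driver': ('1.10.3', '1.9.7')}
```

Parsing the version into a tuple of integers avoids the string-comparison trap where "1.10.3" would sort before "1.9.7".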

Controlling your source code has the added benefit of organizing development efforts, especially when multiple developers are working on a project. There are several providers of source, or version, control software. Microsoft has defined a standard application programming interface (API) for source code control. Source code control systems that use the Microsoft SCC interface make it easier for your test management software to maintain compatibility with several different systems.

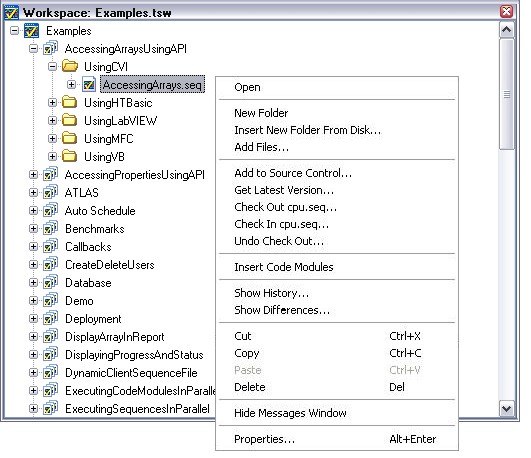

Figure 1: TestStand workspace files provide connectivity to source control tools.

TestStand uses Microsoft SCC to offer source control integration features. In addition to choosing and configuring the SCC provider you use, files can be checked in and out of source code control by using TestStand workspace files. As the name suggests, workspace files can be used to organize your test projects, sequences and measurement code. Additional detail on workspaces and accessing source code control providers from TestStand can be found in the TestStand help file under Workspaces.

Software Deployment

As part of managing your software configuration, you can plan your software deployment strategy. Whether you choose to build an installer or use a network server to distribute your test software, take care to preserve the integrity of the test stations while keeping the upgrade process as quick and seamless as possible.

TestStand includes a deployment utility for distributing your TestStand workspace files. There is more detailed information on deployment in System Deployment Strategies. Additionally, there are NI Partners who develop tools that extend the capabilities of TestStand. To learn more about NI Partners, visit the NI Partner portal.

Upgrading Software

There tends to be a natural concern about upgrading software on deployed test stations. Whether you are upgrading the software you have developed or upgrading a commercial off-the-shelf (COTS) tool, your choice to upgrade will likely be based on incorporating new features or fixing bugs. In both cases, you can plan and prepare for these upgrades to minimize downtime during the transition.

Here are a few recommendations to ease the upgrade process:

- Use modular components to reduce the impact of an upgrade. With a test system built of modular components, you can replace a particular component to upgrade a feature without replacing the entire system or subsystem. This modularity, applicable to both software and hardware, can protect you from obsolescence.

- Maintain backward compatibility for easy transitions. To be successful using the upgrade strategy of modular components, backward compatibility is a must. For example, if you replace the framework of your test executive, you want to be sure that the upgraded framework will still work with your existing test sequences. As another example, if you upgrade an instrument, the replacement will be easier if that instrument can be installed in the same chassis or rack that contains the rest of the system.

- Use software maintenance options when purchasing COTS tools. In the case of NI software, our subscription software program and service benefits offer the latest NI software technology through automatic upgrades. Additionally, customers have access to technical support from NI engineers.

For information on developing modular code, please refer to Best Practices for Code Module Development.
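One way to picture modular, backward-compatible components is an interface that test code depends on, with interchangeable implementations behind it. The sketch below is illustrative only: the `Voltmeter` protocol, both simulated instrument classes, and the limit values are hypothetical and are not part of TestStand or any NI driver.

```python
from typing import Protocol


class Voltmeter(Protocol):
    """The contract test code relies on; implementations may be swapped."""

    def read_volts(self) -> float: ...


class LegacyMeter:
    """Simulated original instrument driver (hypothetical)."""

    def read_volts(self) -> float:
        return 5.01


class UpgradedMeter:
    """Simulated replacement that keeps the same method signature."""

    def __init__(self, averaging: int = 4) -> None:
        # New feature with a default value, so existing callers still work.
        self.averaging = averaging

    def read_volts(self) -> float:
        return 5.002


def supply_voltage_in_limits(meter: Voltmeter, low: float = 4.75, high: float = 5.25) -> bool:
    """Test step written once against the interface, not a specific meter."""
    return low <= meter.read_volts() <= high


print(supply_voltage_in_limits(LegacyMeter()), supply_voltage_in_limits(UpgradedMeter()))
# prints True True
```

Because the test step only calls `read_volts()`, replacing the meter does not require touching the step, which is the property backward compatibility is meant to protect.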

Requirements Management

Most engineering projects start with high-level specifications, followed by the definition of more detailed specifications as the project progresses. Specifications contain technical and procedural requirements that guide the product through each engineering phase. In addition, working documents, such as hardware schematics, simulation models, software source code, and test specifications and procedures, must adhere to and cover the requirements defined by specifications.

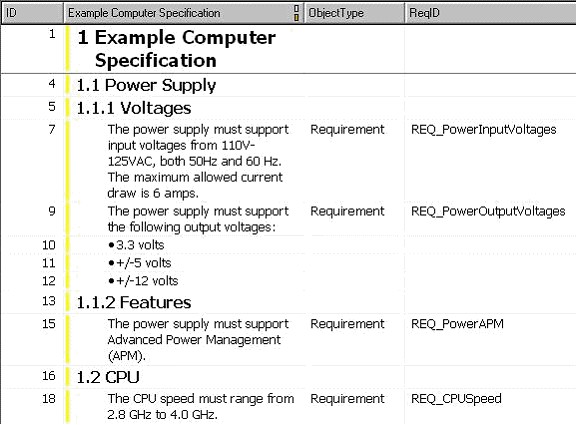

Figure 2: Requirements may be stored in a requirements management tool such as Telelogic DOORS.

These specifications, or requirements, are not just used by your manufacturing or production teams. Many people and processes in an organization use requirements. They may be used in planning and purchasing decisions, reporting your development progress to your customer, guiding software development, and ensuring ultimate product completion or project success.

Traceability

Requirements management, then, hinges on tracking the relationships between requirements at different levels of detail and the relationship of requirements to implementation and test. Requirements traceability is simply this ability to track relationships. The benefits of managing requirements include dealing successfully with complexity, ensuring compliance, tracking progress, streamlining development efforts, and having confidence and certainty in your process and product.

Tracking the relationship from requirements to test, measurement, and control software is crucial for validating implementation, analyzing the full impact of changing requirements, and understanding the impact of test failures. To get this level of traceability, you are likely to want to connect your test software framework to the tools you have used to document or manage your requirements.

Requirements Gateway

Requirements Gateway is a requirements traceability solution that links your development and verification documents to formal requirements stored in documents and databases. With Requirements Gateway you can perform coverage and impact analysis, graphically display coverage relationships, and generate comprehensive reports.

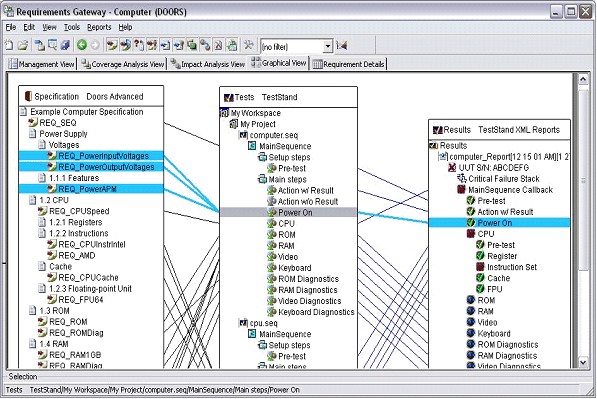

Figure 3: Requirements traceability can be viewed graphically.

The idea behind using a tool such as Requirements Gateway is to connect a specific requirement to the piece of code either where you have implemented the requirements or where you test whether the requirement is being covered. Once the connections are made, you can respond quickly to changes in your requirements or changes in your code. Plus, you can keep track of which requirements still need to be implemented or tested.
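The coverage and impact ideas above can be illustrated with a small Python sketch. It is not how Requirements Gateway works internally; the requirement IDs, test step names, and both helper functions are hypothetical, and the links are held in a plain dictionary rather than read from a requirements tool.

```python
def coverage_report(requirements, links):
    """Split requirement IDs into covered and uncovered lists.

    `links` maps a test step name to the requirement IDs it verifies.
    """
    covered = set()
    for requirement_ids in links.values():
        covered.update(requirement_ids)
    return {
        "covered": sorted(r for r in requirements if r in covered),
        "uncovered": sorted(r for r in requirements if r not in covered),
    }


def impacted_tests(changed_requirement, links):
    """List the test steps to revisit when one requirement changes."""
    return sorted(step for step, ids in links.items() if changed_requirement in ids)


# Hypothetical requirement IDs and test step names.
requirements = ["REQ-001", "REQ-002", "REQ-003"]
links = {
    "Power-On Test": ["REQ-001"],
    "Output Voltage Test": ["REQ-001", "REQ-002"],
}

print(coverage_report(requirements, links))
# prints {'covered': ['REQ-001', 'REQ-002'], 'uncovered': ['REQ-003']}
print(impacted_tests("REQ-001", links))
# prints ['Output Voltage Test', 'Power-On Test']
```

The first call answers "which requirements still need a test?", and the second answers "which tests are affected if this requirement changes?".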

For more on linking your requirements to your code, refer to Requirements Gateway for Test, Measurement, and Control Applications.

Data Management

Data management for test systems involves more than logging test results. While result collection is a fundamental component, access to parametric data and data analysis are important features to consider as well. If databases are being used to store test-related information, then the technology for connecting to your database should be chosen wisely.

Database Connectivity

Database connectivity for your test framework gives you the means to dynamically read values from and write values to a database. While there are a variety of ways to interact with your database management system (DBMS), communicating with a database follows five basic steps:

1. Connect to the database.

2. Open database tables.

3. Fetch data from and store data to the open database tables.

4. Close the database tables.

5. Disconnect from the database.

(Note: As you may have noticed, these steps describe a session, much like how you use a session when controlling an instrument.)

Structured Query Language (SQL) is commonly used to perform steps 2 through 4. That is, once a connection to the database is created, SQL can select data in tables and then read from and write to the selected data.
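The session pattern described above can be sketched with Python's built-in `sqlite3` module. This is an illustration of the general flow, not of TestStand's implementation; the `results` table, its columns, and the sample rows are hypothetical. SQLite has no separate "open table" call, so a cursor stands in for the open table handle.

```python
import sqlite3


def log_and_read_results(db_path, rows):
    """Run one database session: connect, work with a table, disconnect."""
    connection = sqlite3.connect(db_path)  # connect to the database
    try:
        cursor = connection.cursor()  # handle used to work with tables
        cursor.execute(
            "CREATE TABLE IF NOT EXISTS results "
            "(serial TEXT, step TEXT, value REAL, passed INTEGER)"
        )
        # Store data: parameterized SQL keeps values separate from the statement.
        cursor.executemany("INSERT INTO results VALUES (?, ?, ?, ?)", rows)
        connection.commit()
        # Fetch data: select only the failed measurements.
        cursor.execute(
            "SELECT serial, step, value FROM results WHERE passed = 0 ORDER BY serial"
        )
        failures = cursor.fetchall()
        cursor.close()  # close the table handle
        return failures
    finally:
        connection.close()  # disconnect, even if a statement raised an error


# Hypothetical results: (UUT serial number, step name, measurement, pass flag).
sample = [("SN100", "Output Voltage", 5.01, 1), ("SN101", "Output Voltage", 5.62, 0)]
print(log_and_read_results(":memory:", sample))
# prints [('SN101', 'Output Voltage', 5.62)]
```

The `try`/`finally` block mirrors the instrument-session analogy: whatever happens in the middle, the session is always closed.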

TestStand has features to relieve you of being an SQL expert. You can configure database logging through a dialog that walks through configuring your database connection and your schema, a set of SQL commands for mapping TestStand data to your database tables. In addition to the database logging configuration, there are database step types for communicating with your databases directly from your test sequences. There will be more about database logging and the step types in the following sections.

Now, what about steps 1 and 5, that is, connecting to and disconnecting from the database? One solution is to use Microsoft ActiveX Data Objects (ADO) or its successor, ADO.NET, which is built on .NET technology. ADO can connect to databases through Object Linking and Embedding, Database (OLE DB) providers or Open Database Connectivity (ODBC) drivers. Using ADO, you get a standard programming interface to any DBMS for which an OLE DB provider or ODBC driver is available.
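Connections made through an ODBC driver are typically described by a connection string of `KEYWORD=value` pairs separated by semicolons. The Python sketch below only assembles such a string; the `build_odbc_connection_string` helper, the server name, and the database name are hypothetical, and apart from `DRIVER` the keywords accepted depend on the driver in use, so check the driver's documentation before relying on them.

```python
def build_odbc_connection_string(driver, **attributes):
    """Join a driver name and keyword/value pairs into an ODBC-style string.

    The driver name is wrapped in braces because driver names often
    contain spaces. Other values are passed through unchanged.
    """
    parts = [f"DRIVER={{{driver}}}"]
    for keyword, value in attributes.items():
        parts.append(f"{keyword.upper()}={value}")
    return ";".join(parts) + ";"


# Hypothetical server and database names; keywords shown are driver-specific.
connection_string = build_odbc_connection_string(
    "SQL Server", server="testdb01", database="TestResults"
)
print(connection_string)
# prints DRIVER={SQL Server};SERVER=testdb01;DATABASE=TestResults;
```

A string like this is what an ODBC client library expects when opening a connection; opening and closing that connection are steps 1 and 5 of the session.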

Database connectivity and results logging with TestStand are discussed at length in the database logging section of the TestStand Help file. This section also gives you introductory material on databases and the technology TestStand uses to communicate with databases.

Logging Results

Though we are in the midst of a discussion on database connectivity, data management does not have to be synonymous with a database management system. Results can certainly be logged to a database, but you may also want results logged to a file.

Results logging is rarely done for its own sake. Granted, you want a historical record of tests that have been run, but you probably want to log results because you want to use them to debug your systems, to analyze productivity or quality, or to generate reports.

Both databases and files have pros and cons. Using data in a database requires some experience with SQL or a DBMS. On the other hand, database logging and reading are fast enough to support near real-time reports and alerts. File I/O has its own set of challenges, such as performance, format, and security. An advantage of logging to a file is the inherent chronological nature of log files. If you experience a system crash, log files are a great asset for examining the cause of the failure.

In the last section, we saw that TestStand has out-of-the-box database logging along with a dialog-based configuration utility. Once configured, you can log all relevant information including the operator’s name, UUT serial number, test parameters, and results. Whether you are building your own database logging features or using the functionality in TestStand, you will build it on top of how you have implemented your database connectivity.
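The same kind of record can also be appended to a plain file. The following Python sketch writes each result as one row of a CSV log; the field names, the `append_result` helper, and the "Passed"/"Failed" labels are hypothetical choices for illustration, not the format TestStand produces.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path

FIELDS = ["timestamp", "operator", "serial_number", "step", "value", "status"]


def append_result(log_path, operator, serial_number, step, value, passed):
    """Append one test result to a CSV log, writing the header on first use."""
    path = Path(log_path)
    is_new = not path.exists() or path.stat().st_size == 0
    # Append mode preserves the chronological order of earlier entries.
    with path.open("a", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "operator": operator,
            "serial_number": serial_number,
            "step": step,
            "value": value,
            "status": "Passed" if passed else "Failed",
        })
```

A call such as `append_result("results.csv", "jdoe", "SN101", "Output Voltage", 5.62, passed=False)` adds one timestamped line, so the file can be read top to bottom as a history of what ran.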

As an aside, the actual database logging functionality is not native to the TestStand Engine or the TestStand Sequence Editor. The default process model included with TestStand contains customizable sequences that implement the logging features.

Refer to Appendix A, Process Model Architecture, of the TestStand User Manual for additional information.

Additional Resources

An NI Partner is a business entity independent from NI and has no agency or joint-venture relationship and does not form part of any business associations with NI.